By Casey Muratori

Huge Gaps

Those of you who don’t do game development for a living might not know what a “grass planting system” is, or even why someone needs a “system” to plant grass. After all, in the real world, grass often plants itself.

Well, despite the name, a “grass planting system” is a tool designed to give artists a way to quickly scatter geometry in a controlled way, regardless of whether it’s grass or not. It can be used for flora (grass, ferns, weeds, reeds, etc.), but also for anything else that might be scattered around a landscape, like rocks or trash. The artist typically provides a set of things to scatter, sets some parameters that control how the scattering should look, then uses the tool to scatter instances of those things around some part of the game world. This is an order of magnitude faster than having the artist place each one by hand, and realistically, things that come in large quantities like grass would be prohibitively expensive to create otherwise.

There are two distinct flavors of scattering tools that are often used in game development. One works like painting, where the artist “paints” the world with scattered geometry using a stylus to manually brush in geometry where they want it. The other flavor, which is the kind used in The Witness, is more automated. Instead of painting, the artist defines a volume where scattering should happen, then lets the tool scatter geometry inside it.

As part of fixing The Witness’s grass planting system, I received a number of feature requests from the artists, many of which I’ll cover in later articles. But the one I’m going to focus on here was a complaint that the existing system produced distributions of geometry that had “huge gaps”.

If you’ve never worked on a geometry distribution system, that statement might sound quite odd. After all, this system is supposed to be placing things randomly. Shouldn’t a random set of locations naturally produce some large gaps?

Well, it depends on what you mean by “random”. Different people use “random” to mean very different things. For example, when a cryptographer says they want something “random”, they mean “cryptographically strong”, which requires that there aren’t hidden mathematical trends in a sequence of numbers that an adversary might exploit to thwart an encryption algorithm. Likewise, in computer graphics, when we talk about “randomly” distributing things or “randomly” generating things, we are often talking about something that isn’t just any old kind of random. What we typically want is a visually pleasing distribution.

It is a somewhat difficult concept to understand, because it has a lot to do with subtle human perception, but here’s an intuitive example: if I ask you to “randomly” arrange fifty pennies on a table, chances are you will distribute the pennies such that, although they are not in any regular pattern like a grid, they nonetheless cover the table at a relatively uniform density. There probably won’t be dense clumps of pennies in some areas and very sparse areas elsewhere.

Why do people think of this particular configuration (i.e., one with a relatively constant density) as a proper “random” placement of objects? If you think about it, it is actually less “random” in some sense, because pennies placed completely “at random” in the conventional sense would be more likely to produce configurations where a few pennies clump up next to each other, leaving holes in other areas. In fact, humans are doing something very nonrandom when they place things this way: they are looking at and considering where each thing is relative to the other things they’ve already placed.

I don’t pretend to know why people naturally seem to do this, but I suspect it has a lot to do with people’s intuitive observations of the natural phenomena they see around them throughout their lives. When things are scattered in the world, they are usually subject to a lot of forces that simply aren’t that random. Gravity pulls down, liquids and gases spread out due to molecular forces, resource contention often causes living things to equalize coverage in an area, etc., etc. So when humans want something “randomly” scattered, what they really expect to see is actually a very specific distribution that maintains a relatively constant density.

And if that’s not what they see, they may very well complain that the distribution has “huge gaps.” Unfortunately for the programmer, throwing your hands up and saying, “but huge gaps can happen in truly random distributions!” is not generally looked upon as a reasonable response.
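To make the penny example concrete, here is a minimal Python sketch (not code from The Witness) contrasting two ways of placing 50 points on a hypothetical unit-square “table”. `place_uniform` drops each point independently, which is random in the conventional sense. `place_with_spacing` imitates the human penny placer by rejecting any candidate closer than an assumed `min_dist` to a point already placed; this is simple dart throwing, one common technique chosen here purely for illustration. All names and parameter values are assumptions.

```python
import math
import random

def place_uniform(n, rng):
    """Drop n points independently and uniformly in the unit square.
    Each point ignores all the others, so clumps and holes are allowed."""
    return [(rng.random(), rng.random()) for _ in range(n)]

def place_with_spacing(n, min_dist, rng, max_attempts=10000):
    """Dart throwing: like the penny placer, look at the points already
    placed and reject any candidate closer than min_dist to one of them.
    May return fewer than n points if max_attempts runs out."""
    points = []
    attempts = 0
    while len(points) < n and attempts < max_attempts:
        attempts += 1
        candidate = (rng.random(), rng.random())
        if all(math.dist(candidate, p) >= min_dist for p in points):
            points.append(candidate)
    return points

def min_pairwise_distance(points):
    """Smallest distance between any two points (brute force, O(n^2))."""
    return min(math.dist(a, b)
               for i, a in enumerate(points)
               for b in points[i + 1:])

rng = random.Random(1)
uniform = place_uniform(50, rng)
spaced = place_with_spacing(50, 0.08, rng)
print(min_pairwise_distance(uniform), min_pairwise_distance(spaced))
```

With independent placement, nothing prevents two points from landing almost on top of each other, which leaves correspondingly larger holes elsewhere. The spaced version guarantees a minimum separation by construction, at the cost of checking every new point against all the previous ones.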

Always Draw Everything

Naturally, the first thing I did when looking at the “huge gaps” problem was to ask the artists for an example of the gaps they found to be “huge”. They pointed me to a particular grass system that was meant to distribute small ferns around a forest floor. The result did, in fact, seem to have gaps that were somewhat huge. But why?

I started playing around with the grass system in question, but I noticed early on that there was really no way to see what it was actually doing. I’d select it, tell it to “replant” (the Witness editor’s term for redistributing the geometry), then watch as ferns were freshly scattered around. But what did the distribution actually look like? Sure, I could see the end result, but it just looked like a bunch of clumpy ferns. What I wanted to see was where the system actually thought it was planting the ferns, if for no other reason than to make it easier to assess the quality of the distribution without having to mentally reconstruct it from looking at fern meshes.

Instead of stepping through the code or even carefully reading it to see what it was doing, I did what I often do these days and added debug visualization code right away. I’ve been through these kinds of problems enough times that I know, more often than not, if you’re going to debug one thing in a piece of code, you’re going to debug a bunch of things, so you might as well add the debug code early and leverage it throughout the whole process. Why wait for the really hard bug before adding the debug code? It takes the same amount of time to write debug code earlier as it does later, but earlier means you can use it to speed debugging of all those not-as-hard bugs along the way.

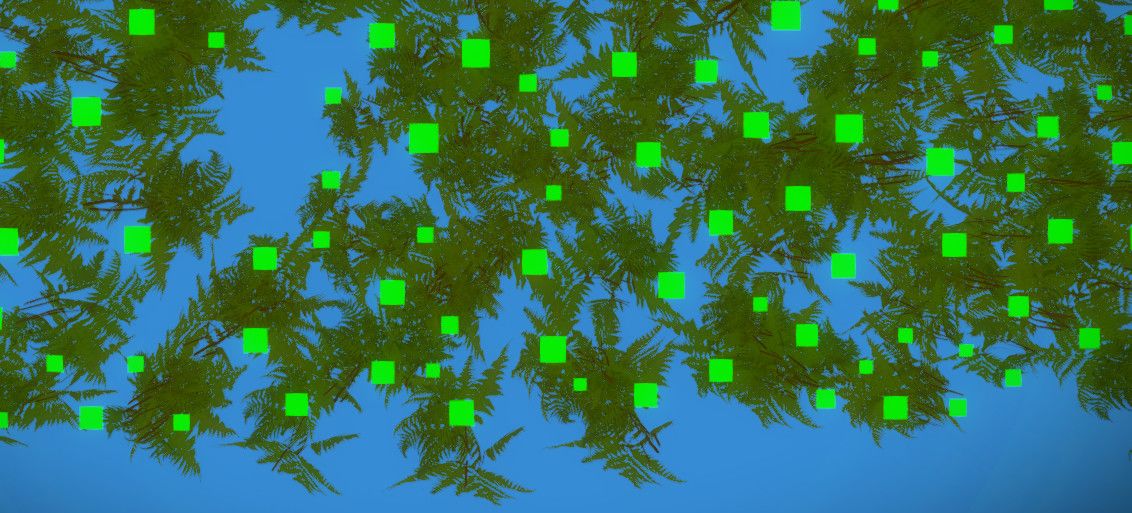

Thankfully, it was trivial to add some code that drew where the system thought it was planting the ferns, because it already maintained an array of each point it used, since it needed it for its computations. So all I had to do was draw some bright green markers (without depth occlusion) using that array. When I looked at the ferns with this debug display enabled, this is what I saw:

That’s a screenshot taken from underneath the ground, looking up at the ferns, so that it’s easier to see where the planting points are relative to the fern geometry. What jumped out at me right away was that the fern meshes themselves didn’t seem to be centered around the points where they were supposedly being placed. This can make a big difference in terms of perceived “gaps” in the geometry, because the meshes are randomly rotated when they are placed. If the mesh is off-center, then depending on how each fern was randomly rotated, it might take up much more space to its left than to its right, or vice versa. Thus, a perfectly fine distribution of planting points could look like it had a lot of gaps just because the volumes occupied by the meshes placed on them were all offset in different directions.

If ever there was a tailor-made example of why adding debug drawing code saves a lot of time, this was it. There had been mails back and forth about this issue for quite some time before I started looking at the problem, and even though the ferns had been specifically discussed, nobody had ever noticed that they were centered incorrectly. Without enough visual information being displayed, people were free to ascribe problems to the most likely culprits. Since there were gaps in the appearance of the geometry generated by the grass system, it was only natural for people to assume it to be a problem with the distribution itself. But once enough intermediate information was displayed, it became obvious that there were other contributing factors at play.

I reported this problem to the artists, and they confirmed that the mesh had in fact been centered wrong in Maya. Fixing this improved the problem they were observing with the “huge gaps”, without me ever even touching the algorithm. Chalk up another victory to early implementation of debug drawing code.
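The effect of the off-center fern meshes described above is easy to quantify. The following is a minimal sketch assuming a planter that puts each mesh’s pivot on its planting point and then applies a random rotation about the vertical axis; `visual_center` and `pivot_offset` are hypothetical names for illustration, not the editor’s actual code. Whatever the rotation, the mesh’s visual center ends up exactly the length of `pivot_offset` away from its planting point, so two neighbors can be pushed apart (or together) by up to twice that amount.

```python
import math

def visual_center(plant_point, pivot_offset, yaw):
    """Return where a mesh's visual center lands in the ground plane.

    plant_point  -- (x, y) planting point; the mesh pivot is placed here.
    pivot_offset -- (x, y) of the mesh's visual center relative to its pivot,
                    i.e. how far off-center the mesh was authored.
    yaw          -- random rotation about the vertical axis, in radians.
    """
    ox, oy = pivot_offset
    c, s = math.cos(yaw), math.sin(yaw)
    # Rotate the authored offset by the yaw, then translate to the planting point.
    return (plant_point[0] + c * ox - s * oy,
            plant_point[1] + s * ox + c * oy)

# Two planting points one unit apart, with meshes authored 0.4 units off-center.
offset = (0.4, 0.0)
left = visual_center((0.0, 0.0), offset, math.pi)  # rotated so it leans left
right = visual_center((1.0, 0.0), offset, 0.0)     # unrotated, leans right
print(math.dist(left, right))  # roughly 1.8 instead of the intended 1.0
```

With `pivot_offset` at zero, the visual centers coincide with the planting points and the spacing the distribution intended is the spacing you see, which is why fixing the pivot in the source art helped without touching the distribution algorithm.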

Small Gaps

Unfortunately, even with the ferns properly centered, the distribution was still looking a bit too “gappy”. The gaps were no longer “huge”, but they were there.

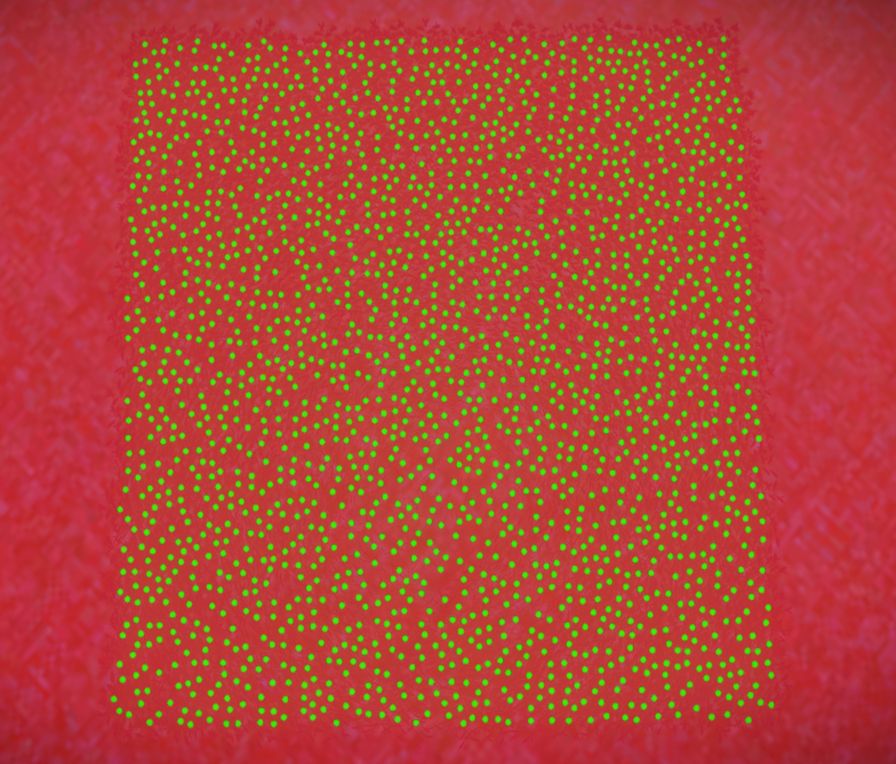

I set up a grass system in a test area so it was easier to see, since I knew I’d be doing a fair bit of work on the code, and it is always much quicker to iterate on something in a test area than in the full game environment (less load times, faster rendering, etc.). With the green markers on, a simple distribution with the grass system looked like this:

As you can see, there are a number of places that still look like “gaps”. Again, there’s nothing inherently nonrandom about these gaps, but they are still problematic for our purposes because, unless you have specifically instructed the grass system to do otherwise, the goal is for it to produce a relatively consistent density of points, and these gaps made the density appear to vary. So the next thing I had to do was take a good look at how the current scattering algorithm was working, and figure out why it wasn’t producing a consistent point density.
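One way to put a number on “gappiness” while iterating in a test area is to estimate the largest empty circle among the planting points. Below is a brute-force Python sketch, assumed purely for illustration and not part of the original grass system: it probes a regular grid over a hypothetical `width` by `height` region and reports the largest distance from any probe to its nearest planting point. A distribution with consistent density keeps this value close to the typical point spacing; a clumpy one does not.

```python
import math

def largest_gap_radius(points, width, height, resolution=64):
    """Approximate the radius of the largest empty circle in the region.

    Probes the center of every cell of a resolution x resolution grid and
    returns the largest distance from a probe to its nearest planting point.
    Brute force (O(resolution^2 * len(points))), fine for debug-sized sets.
    """
    worst = 0.0
    for i in range(resolution):
        for j in range(resolution):
            probe = ((i + 0.5) * width / resolution,
                     (j + 0.5) * height / resolution)
            nearest = min(math.dist(probe, p) for p in points)
            worst = max(worst, nearest)
    return worst

# Sixteen points spread evenly vs. sixteen points crammed into one corner.
even = [((i + 0.5) / 4, (j + 0.5) / 4) for i in range(4) for j in range(4)]
clumped = [(0.05 + 0.02 * i, 0.05 + 0.02 * j) for i in range(4) for j in range(4)]
print(largest_gap_radius(even, 1.0, 1.0), largest_gap_radius(clumped, 1.0, 1.0))
```

Because it works on the same array of planting points the debug markers draw, a number like this can sit alongside the visualization and make before-and-after comparisons less dependent on eyeballing.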

And that is where I will pick up this story on the next Witness Wednesday.